I recently picked up a 16 GB Intel Optane drive off eBay for pennies, thinking I was in for a big win. My hope? That it would supercharge ZFS synchronous write performance when used as a SLOG (a separate log device for the ZIL), especially to help out some aging SAS SSDs I also bought online. Those early-gen SSDs turned out to have pretty poor write latency, so the idea was that the Optane, known for low latency, would act as a performance crutch.

Spoiler: it didn’t help. In fact, it made things worse.

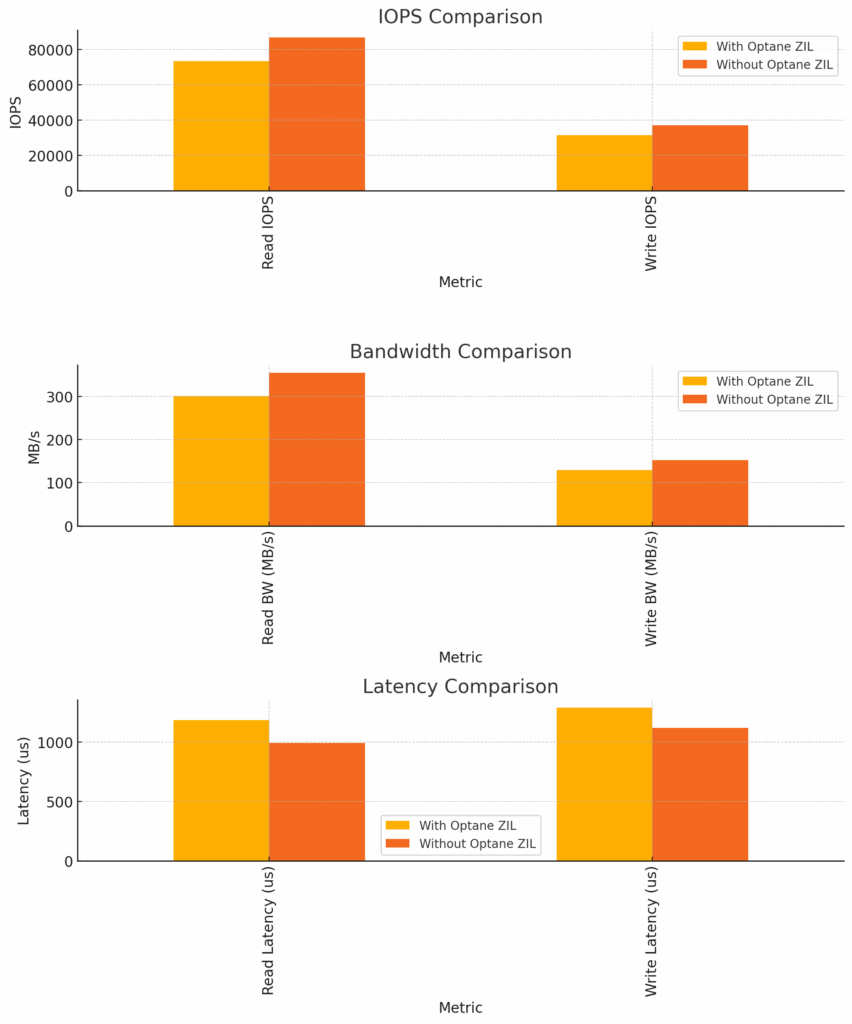

I ran fio benchmarks from inside a VM with its VHD on ZFS. With the Optane SLOG attached, the results were:

- Read IOPS dropped ~15% (from 86.8k to 73.6k)
- Write IOPS dropped ~15% (from 37.2k to 31.6k)
- Read latency increased (from ~994μs to ~1184μs)
- Write latency increased (from ~1118μs to ~1290μs)
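As a quick sanity check on those figures, here is a small sketch that computes the percentage change for each pair. The `pct_change` helper is just an illustrative name, not part of any tool. Working the quoted numbers through shows both IOPS drops are about 15%, while the read latency increase is closer to 19%.

```shell
# Percentage change from a baseline value to a new value, to one decimal place.
pct_change() {
  awk -v before="$1" -v after="$2" \
    'BEGIN { printf "%+.1f\n", (after - before) / before * 100 }'
}

pct_change 86.8 73.6   # read IOPS:          -15.2
pct_change 37.2 31.6   # write IOPS:         -15.1
pct_change 994 1184    # read latency (µs):  +19.1
pct_change 1118 1290   # write latency (µs): +15.4
```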

    fio --name=proxmox-zfs-test \
      --directory=/your/zfs/mountpoint \
      --rw=randrw \
      --rwmixread=70 \
      --bs=4k \
      --ioengine=libaio \
      --iodepth=32 \
      --numjobs=4 \
      --runtime=60 \
      --time_based \
      --group_reporting \
      --direct=1 \
      --filename=testfile.fio \
      --size=10G
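A SLOG only services synchronous writes, and the command above is a 70/30 mixed read/write test that doesn't explicitly ask for them. How many of its writes reach ZFS as sync writes depends on the guest filesystem and the hypervisor's disk cache settings. To isolate the sync write path, something like the following sketch might be a useful follow-up. It is not what I ran for the numbers above: the `sync_write_test` wrapper name is made up for illustration, and the only changes from the original command are `--rw=randwrite` and the added `--sync=1`.

```shell
# Sketch: same 4k random I/O, but write-only and opened with O_SYNC,
# so every write must be committed to stable storage before it completes.
sync_write_test() {
  # $1 = directory on the filesystem under test (placeholder path, as above)
  fio --name=sync-write-test \
    --directory="$1" \
    --rw=randwrite \
    --bs=4k \
    --ioengine=libaio \
    --iodepth=32 \
    --numjobs=4 \
    --runtime=60 \
    --time_based \
    --group_reporting \
    --direct=1 \
    --sync=1 \
    --filename=testfile.fio \
    --size=10G
}

# Usage: sync_write_test /your/zfs/mountpoint
```

Running it once with the log device attached and once without should show more directly whether the SLOG helps or hurts sync writes.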

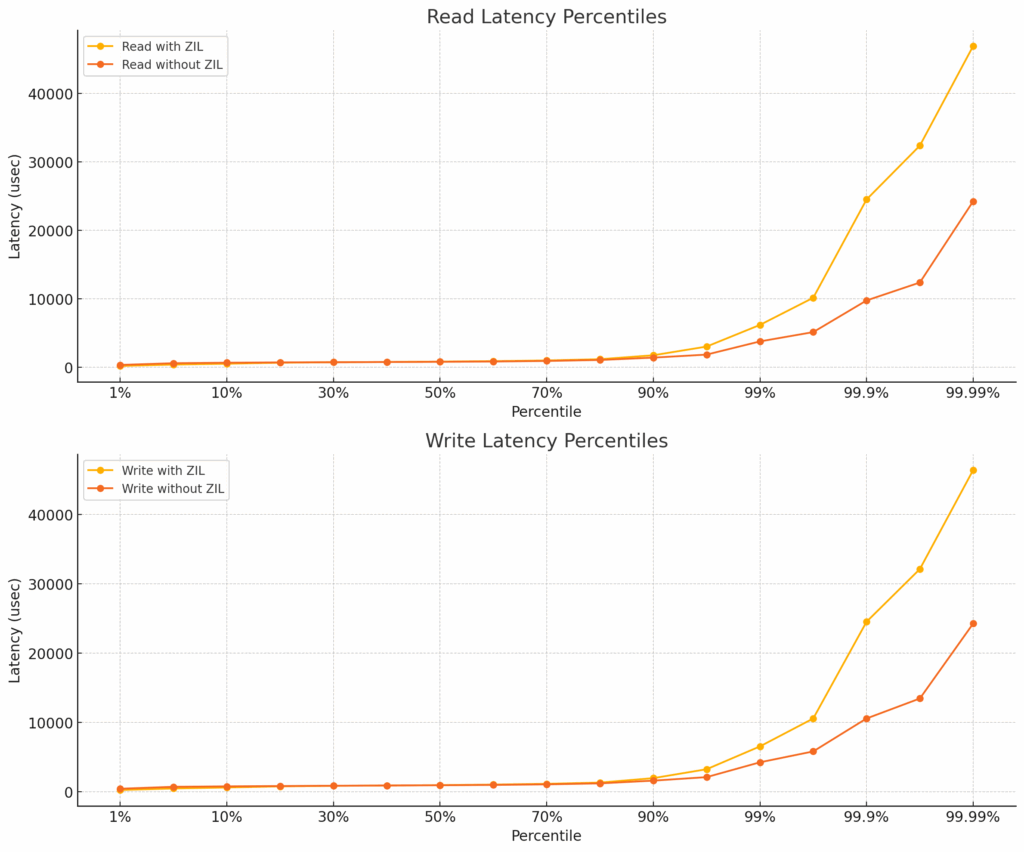

Where the two configurations really diverged was tail latency, in the higher percentiles. Once the Optane drive hit high utilisation, it just fell apart.
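One way to watch this happen is to keep an eye on per-vdev statistics on the host while the benchmark runs in the VM. The sketch below assumes a pool called `tank` (a placeholder, substitute your own), and `watch_slog` is just an illustrative wrapper name. The log device appears under its own `logs` section in the output.

```shell
# Sketch: per-vdev throughput and latency, refreshed every second.
# -v lists each vdev (including the "logs" section), -l adds latency columns,
# -y skips the initial since-boot summary.
watch_slog() {
  # $1 = pool name (hypothetical, e.g. "tank")
  zpool iostat -v -l -y "$1" 1
}

# Usage, on the host while fio runs in the VM: watch_slog tank
```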

Despite its promise and low price, the tiny 16 GB Optane doesn’t deliver the kind of benefits you’d expect in this role, at least not for VM workloads with heavy synchronous write activity. In fact, using no SLOG at all gave me better performance.
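If you want to run the same A/B comparison, a log device can be added to and removed from a live pool. This is a minimal sketch: the pool name `tank` and the device path are placeholders, and the two function names are only for illustration.

```shell
# Sketch: attach and detach a separate log (SLOG) device.
add_slog() {
  # $1 = pool name, $2 = device path (both hypothetical)
  zpool add "$1" log "$2"
}

remove_slog() {
  # $1 = pool name, $2 = the log device as shown by `zpool status`
  zpool remove "$1" "$2"
}

# Usage:
#   add_slog tank /dev/disk/by-id/nvme-EXAMPLE
#   zpool status tank        # the device should appear under "logs"
#   remove_slog tank /dev/disk/by-id/nvme-EXAMPLE
```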

Lesson learned: Just because it’s cheap and has “Optane” on the label doesn’t mean it’s a magic bullet for ZFS performance.